In computer science, a data buffer (or just buffer) is a region of memory used to temporarily store data while it is being moved from one place to another.

Thursday, 2 March 2023

Wednesday, 1 March 2023

About Node JS

What exactly is a node?

A node is a connection point in a network: a processing device with an assigned address, such as a router, computer terminal, peripheral device, or mobile device. Examples: "nodes on the internet"; "In a well-designed network, the failure of one computer node does not cause a failure in the network."

Who Uses Node.js?

The Node.js wiki on GitHub contains an exhaustive list of projects, applications, and companies that use Node.js. The list includes eBay, General Electric, GoDaddy, Microsoft, PayPal, Uber, Wikipins, Yahoo!, and Yammer, to name a few.

Concepts

Where to Use Node.js?

The following are areas where Node.js has proven to be a good fit:

I/O-bound Applications

Data Streaming Applications

Data Intensive Real-time Applications (DIRT)

JSON API-based Applications

Single Page Applications

Where Not to Use Node.js?

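It is not advisable to use Node.js for CPU-intensive applications. Node.js runs JavaScript on a single-threaded event loop, so a long-running computation blocks every other request until it finishes.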

Source : https://www.tutorialspoint.com/nodejs/nodejs_introduction.htm

Tuesday, 21 February 2023

What Dropshipping Actually Is

What is dropshipping?

Dropshipping allows you to sell products online without having to worry about storage, fulfillment, or shipping. Instead, your supplier or manufacturer ships the product directly to your customer once an order is received. This means you never have to touch the product or manage a warehouse.

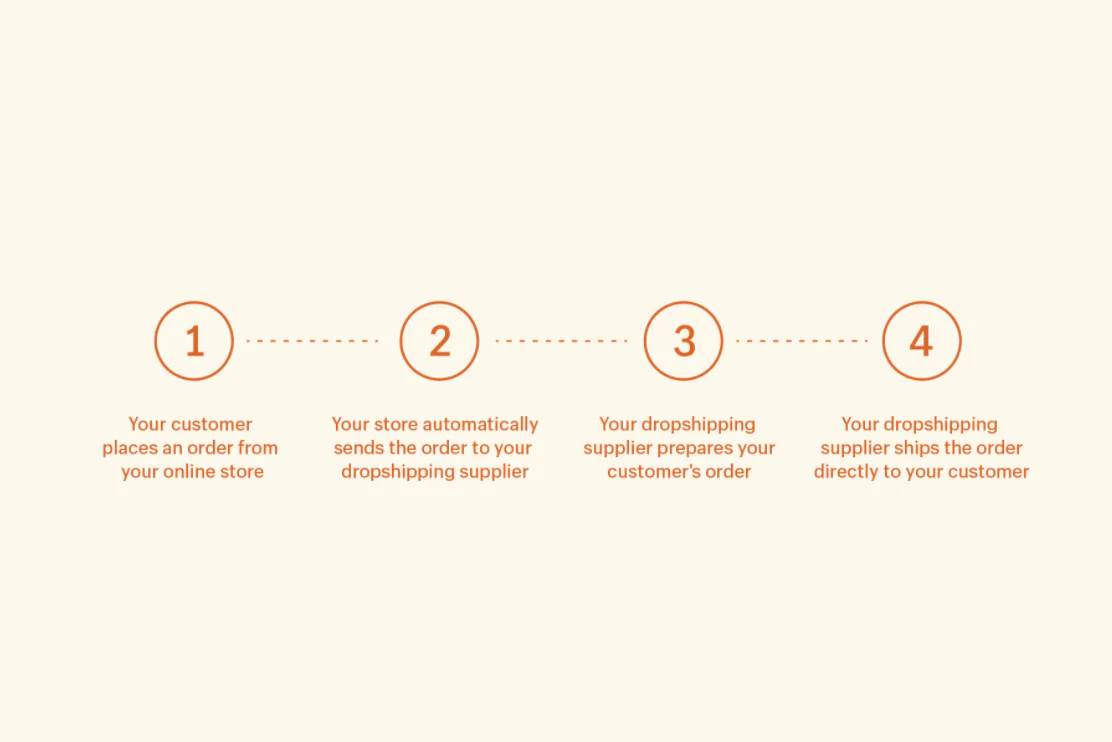

How does dropshipping work?

The dropshipping process is essentially a relationship between a customer-facing store and a supplier.

There are two common approaches to adopting a dropshipping business model. The first is to seek out one or more wholesale suppliers located in North America (or anywhere else in the world) on your own using a supplier database. Examples of popular online supplier databases include AliExpress, SaleHoo, and Worldwide Brands.

If you’re not interested in finding suppliers for all of the products you plan to sell, you can use an app that connects you and your store to thousands of suppliers. For this, we recommend DSers, a Shopify app that helps independent business owners find products to sell.

With DSers, you can browse AliExpress and import the products that pique your interest directly to DSers—which is connected to your Shopify store—with the click of a button. Once a customer buys a product, you’ll be able to fulfill their order in the DSers app.

Fortunately, DSers automates most of the dropshipping process. As the store owner, all you have to do is check that the details are correct and click the order button. The product is then shipped directly from the AliExpress supplier to the customer—wherever in the world they may be.

Monday, 13 February 2023

About Web Scraping

What is Web Scraping?

Web scraping is an automated method of obtaining large amounts of data from websites. Most of this data is unstructured HTML, which is converted into structured data in a spreadsheet or a database so that it can be used in various applications. There are several ways to scrape a website: using online services, using a site’s own API, or writing your own scraping code from scratch. Many large websites, such as Google, Twitter, Facebook, and StackOverflow, have APIs that let you access their data in a structured format. When an API is available, it is the best option. Other sites either don’t allow users to access large amounts of data in a structured form or simply don’t offer an API. In that situation, web scraping is the way to get the data.

Web scraping requires two parts, namely the crawler and the scraper. The crawler (sometimes called a spider) is a program that browses the web by following links from page to page, looking for pages that contain the required data. The scraper, on the other hand, is a tool built to extract the data from those pages. The design of the scraper can vary greatly with the complexity and scope of the project, so that it can extract the data quickly and accurately.
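The crawler half can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `PAGES` dictionary is a made-up, in-memory stand-in for a website, and the names `LinkExtractor` and `crawl` are hypothetical. A real crawler would download each page over HTTP, respect the site's rules, and resolve relative URLs.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical in-memory "website": URL -> HTML. A real crawler would
# fetch each page over the network instead of reading from this dict.
PAGES = {
    "/": '<a href="/juicers">Juicers</a> <a href="/about">About</a>',
    "/juicers": '<a href="/juicers/model-a">Model A</a> <a href="/">Home</a>',
    "/juicers/model-a": "<p>Model A juicer</p>",
    "/about": '<a href="/">Home</a>',
}


def crawl(start, pages):
    """Breadth-first crawl: visit each reachable page once, in discovery order."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        parser = LinkExtractor()
        parser.feed(pages.get(url, ""))
        for link in parser.links:
            # Skip links we have already queued and links outside our "site".
            if link in pages and link not in seen:
                seen.add(link)
                queue.append(link)
    return order


visited = crawl("/", PAGES)
print(visited)
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, as the "Home" links do here.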

How Do Web Scrapers Work?

Web scrapers can extract all the data on a particular site or only the specific data that a user wants. Ideally, you specify the data you want so that the web scraper extracts only that data and finishes quickly. For example, you might want to scrape an Amazon page for the types of juicers available, but you might only want the data about the models of different juicers and not the customer reviews.

When a web scraper needs to scrape a site, it is first given the URLs. It then loads all the HTML code for those pages; a more advanced scraper might load the CSS and JavaScript as well. Next, the scraper extracts the required data from the HTML and outputs it in the format specified by the user. Usually this is an Excel spreadsheet or a CSV file, but the data can also be saved in other formats, such as a JSON file.
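The extract-and-output steps described above might look like the following sketch. It uses only Python's standard library and parses a hard-coded HTML string, so nothing is downloaded. The markup, the `product`, `model`, and `price` class names, and the `ProductScraper` and `scrape_to_csv` names are all assumptions made up for the example, not the structure of any real site.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical product listing; a real scraper would first download this HTML.
HTML = """
<ul>
  <li class="product"><span class="model">Juicer A</span><span class="price">49.99</span></li>
  <li class="product"><span class="model">Juicer B</span><span class="price">89.50</span></li>
</ul>
"""


class ProductScraper(HTMLParser):
    """Keeps only the text of <span class="model"> and <span class="price">."""

    FIELDS = ("model", "price")

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # field whose text we are currently inside, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.rows.append({})  # start a new output row
        elif tag == "span" and css_class in self.FIELDS and self.rows:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def scrape_to_csv(html):
    """Extract the product rows and return them as CSV text."""
    scraper = ProductScraper()
    scraper.feed(html)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=ProductScraper.FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(scraper.rows)
    return out.getvalue()


csv_text = scrape_to_csv(HTML)
print(csv_text)
```

Writing to a JSON file instead would only change the last step: the same `rows` list could be passed to `json.dumps`.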

Different Types of Web Scrapers

Web Scrapers can be divided on the basis of many different criteria, including Self-built or Pre-built Web Scrapers, Browser extension or Software Web Scrapers, and Cloud or Local Web Scrapers.

You can build your own web scraper, but that requires advanced knowledge of programming, and the more features you want in it, the more knowledge you need. Pre-built web scrapers, on the other hand, are ready-made scrapers that you can download and run easily. These also have more advanced options that you can customize.

Browser extension web scrapers are extensions that you add to your browser. They are easy to run because they are integrated with the browser, but they are also limited for the same reason: any advanced feature outside the scope of the browser cannot run in a browser extension. Software web scrapers don’t have these limitations, as they are downloaded and installed on your computer. They are more complex than browser extension scrapers, but they offer advanced features that are not limited by the scope of your browser.

Cloud web scrapers run in the cloud, on an off-site server usually provided by the company you buy the scraper from. This frees your computer for other tasks, since its resources are not needed to scrape data from websites. Local web scrapers, on the other hand, run on your computer using local resources. If the scraper needs a lot of CPU or RAM, your computer will slow down and may struggle to perform other tasks.

Why is Python a popular programming language for Web Scraping?

Python seems to be in fashion these days! It is one of the most popular languages for web scraping, as it can handle most of the process easily. It also has a variety of libraries created specifically for web scraping. Scrapy is a very popular open-source web crawling framework written in Python. It is well suited to web scraping as well as to extracting data using APIs. Beautiful Soup is another Python library that is highly suitable for web scraping. It creates a parse tree that can be used to extract data from the HTML of a website. Beautiful Soup also has multiple features for navigating, searching, and modifying these parse trees.
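To make the idea of a parse tree concrete, here is a minimal sketch built on Python's standard-library `html.parser`. It is not Beautiful Soup and does not reproduce its API: `Node`, `TreeBuilder`, `parse`, and this `find_all` method are hypothetical names for the example, and the builder assumes well-formed HTML with no void tags such as `<br>`. It only illustrates what navigating, searching, and modifying a tree means.

```python
from html.parser import HTMLParser


class Node:
    """One element in the parse tree."""

    def __init__(self, tag, attrs=None, parent=None):
        self.tag = tag
        self.attrs = dict(attrs or [])
        self.parent = parent
        self.children = []
        self.text = ""

    def find_all(self, tag):
        """Depth-first search for every descendant with the given tag name."""
        found = []
        for child in self.children:
            if child.tag == tag:
                found.append(child)
            found.extend(child.find_all(tag))
        return found


class TreeBuilder(HTMLParser):
    """Builds a Node tree; assumes well-formed HTML with no void tags."""

    def __init__(self):
        super().__init__()
        self.root = Node("[document]")
        self._current = self.root

    def handle_starttag(self, tag, attrs):
        node = Node(tag, attrs, parent=self._current)
        self._current.children.append(node)
        self._current = node  # descend into the new element

    def handle_endtag(self, tag):
        if self._current.parent is not None:
            self._current = self._current.parent  # climb back up

    def handle_data(self, data):
        self._current.text += data.strip()


def parse(html):
    builder = TreeBuilder()
    builder.feed(html)
    builder.close()
    return builder.root


tree = parse(
    '<div><h1>Juicers</h1><p class="model">Model A</p><p class="model">Model B</p></div>'
)
models = [p.text for p in tree.find_all("p")]  # searching the tree
heading = tree.find_all("h1")[0]
parent_tag = heading.parent.tag                # navigating upward
heading.text = "Juicer models"                 # modifying a node
print(models, parent_tag, heading.text)
```

A library such as Beautiful Soup does the same kind of work while also coping with malformed markup, which this sketch does not attempt.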

What is Web Scraping used for?

Web Scraping has multiple applications across various industries. Let’s check out some of these now!

1. Price Monitoring

Companies can use web scraping to scrape product data for their own products and for competing products, and see how it affects their pricing strategies. They can use this data to set the optimal price for their products and maximize revenue.

2. Market Research

Web scraping can be used for market research by companies. High-quality web scraped data obtained in large volumes can be very helpful for companies in analyzing consumer trends and understanding which direction the company should move in the future.

3. News Monitoring

Web scraping news sites can provide detailed reports on the current news to a company. This is even more essential for companies that are frequently in the news or that depend on daily news for their day-to-day functioning. After all, news reports can make or break a company in a single day!

4. Sentiment Analysis

If companies want to understand how consumers generally feel about their products, then sentiment analysis is a must. Companies can use web scraping to collect data from social media websites such as Facebook and Twitter and gauge the general sentiment about their products. This helps them create products that people want and move ahead of their competition.

5. Email Marketing

Companies can also use web scraping for email marketing. They can collect email addresses from various sites using web scraping and then send bulk promotional and marketing emails to the owners of those addresses.

Source : https://www.geeksforgeeks.org/what-is-web-scraping-and-how-to-use-it/

Wednesday, 25 January 2023

What Is B2B?

What Is Business-to-Business (B2B)?

Business-to-business (B2B), also called B-to-B, is a form of transaction between businesses, such as one involving a manufacturer and a wholesaler, or a wholesaler and a retailer. Business-to-business refers to business that is conducted between companies, rather than between a company and an individual consumer. Business-to-business stands in contrast to business-to-consumer (B2C) and business-to-government (B2G) transactions.

Source : https://www.investopedia.com/terms/b/btob.asp